When operating Kubernetes, there are moments when you think, “Huh? Why did it end up here?” One of the most common misconceptions is the belief that “Pods will be distributed evenly (Round-Robin) across nodes.”

Today, using a ‘Pod concentration’ case I ran into myself, I will dig into the invisible logic the Kubernetes scheduler uses to score and select nodes. 🚀

0. Problem Situation: “There are clearly multiple nodes, but…”

For testing, I created MySQL, Nginx (twice), and httpd pods in sequence. My expectation was naturally, “They’ll be nicely divided among Node 1, Node 2, Node 3… right?”

But reality was different.

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

mysql 1/1 Running 0 66m 10.16.2.12 gke-cluster-1-default-pool-rr03

nx 1/1 Running 0 16m 10.16.2.13 gke-cluster-1-default-pool-rr03

nx1 1/1 Running 0 6s 10.16.2.14 gke-cluster-1-default-pool-rr03

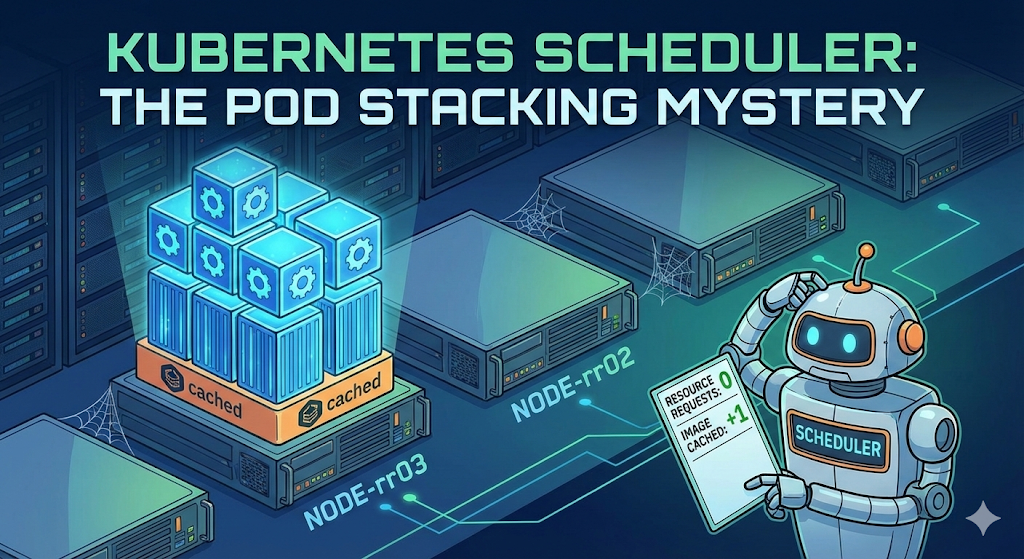

httpd 1/1 Running 0 4s 10.16.2.15 gke-cluster-1-default-pool-rr03 As you can see, mysql, nx, and even httpd (a different image) were all continuously created on a single node called rr03. While other nodes were completely empty! 😱

Why is this happening? Is the scheduler broken?

1. Clearing Misconceptions: The Scheduler doesn’t know ABC order ❌

Many people think that because the scheduler uses a ‘Round-Robin’ method, it will distribute pods in node name order (A->B->C). In reality, the Kubernetes scheduler chooses a node by ‘Score’, in three steps:

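- Filtering: Eliminate nodes that cannot run the pod (not enough resources, taints, affinity rules, etc.)

- Scoring: Each scoring plugin rates the remaining nodes from 0 to 100 points, and the weighted scores are added up

- Ranking: Select the node with the highest total (ties are broken at random)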
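The three steps above can be sketched as a toy model. This is a simplified illustration under my own assumptions, not kube-scheduler's code: the node names rr01 and rr02, the two scoring functions (`free_cpu_score`, `image_present_score`), and their equal weights are hypothetical. A pod without requests is modeled as `pod_mcpu=0`.

```python
import random
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    allocatable_mcpu: int                     # CPU the node can hand out, in millicores
    requested_mcpu: int = 0                   # sum of CPU requests of pods already on it
    images: set = field(default_factory=set)  # images already pulled on the node

# Filter (toy): does the pod's CPU request still fit on the node?
def fits(node: Node, pod_mcpu: int) -> bool:
    return node.requested_mcpu + pod_mcpu <= node.allocatable_mcpu

# Score plugins (toy, hypothetical): each returns 0..100, higher is better.
def free_cpu_score(node: Node, pod_mcpu: int, pod_image: str) -> int:
    free = node.allocatable_mcpu - node.requested_mcpu - pod_mcpu
    return max(free, 0) * 100 // node.allocatable_mcpu

def image_present_score(node: Node, pod_mcpu: int, pod_image: str) -> int:
    return 100 if pod_image in node.images else 0

SCORE_PLUGINS = [(free_cpu_score, 1), (image_present_score, 1)]  # (plugin, weight)

def schedule(nodes, pod_mcpu, pod_image, rng=random):
    # 1. Filtering: drop nodes that cannot run the pod at all.
    feasible = [n for n in nodes if fits(n, pod_mcpu)]
    if not feasible:
        return None  # the pod would stay Pending
    # 2. Scoring: weighted sum of every plugin's 0..100 score.
    totals = {n.name: sum(w * plugin(n, pod_mcpu, pod_image) for plugin, w in SCORE_PLUGINS)
              for n in feasible}
    # 3. Ranking: the highest total wins; ties are broken at random.
    best = max(totals.values())
    return rng.choice([name for name, total in totals.items() if total == best])

nodes = [Node("rr01", 1000), Node("rr02", 1000), Node("rr03", 1000, images={"nginx"})]
print(schedule(nodes, 0, "nginx"))  # rr03: same free-CPU score, plus the image bonus
print(schedule(nodes, 0, "httpd"))  # full tie, so any of the three nodes
```

With no requests, every node gets the same free-CPU score, so the image bonus alone sends the nginx pod to rr03; for httpd all three nodes tie and the pick is random.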

In other words, rr03 was selected again and again not because of any ordering, but because it consistently came out as the “top candidate” in the scheduler’s eyes.

2. Culprit Analysis: Why did the full node become the top choice? 🤔

Why did a node already running 3 pods become the top choice while empty nodes were available? Two factors worked together.

① Resource Requests Not Set = Treated as “Invisible”

I did not set resources.requests (CPU/Memory request amounts) when creating the pods.

- Scheduler’s perspective: “This pod uses 0 CPU, 0 memory?”

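- Judgment: However many request-less pods sit on rr03, the Filter step adds up their requests as 0, so the node never looks “full”. For scoring, the scheduler plugs in only a small fixed placeholder (100m CPU and 200 MiB memory per container without requests), which says nothing about how much the pod really consumes.

- Result: “An empty node or rr03, both look spacious enough anyway?” (The resource score cannot see how heavy these pods really are.)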
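To make the difference between the two steps concrete, here is a small sketch of how requests are summed on a node. The placeholder values (100 millicores, 200 MiB) are what I understand upstream kube-scheduler to use when scoring containers without requests; the pod representation (lists of dicts with hypothetical `cpu_m` and `memory_bytes` keys) is made up for this example.

```python
# Placeholders kube-scheduler is assumed to use when *scoring* a container
# that sets no request (100 millicores CPU, 200 MiB memory).
DEFAULT_MILLI_CPU = 100
DEFAULT_MEMORY_BYTES = 200 * 1024 * 1024

def container_requests(container: dict, for_scoring: bool) -> tuple:
    """Return (cpu_millicores, memory_bytes) for one container.

    A missing key means the request was not set: the Filter step counts it
    as 0, while the scoring step substitutes the placeholder.
    """
    cpu = container.get("cpu_m")
    mem = container.get("memory_bytes")
    if for_scoring:
        cpu = DEFAULT_MILLI_CPU if cpu is None else cpu
        mem = DEFAULT_MEMORY_BYTES if mem is None else mem
    else:
        cpu = 0 if cpu is None else cpu
        mem = 0 if mem is None else mem
    return cpu, mem

def node_requested(pods: list, for_scoring: bool) -> tuple:
    """Sum (cpu, memory) over every container of every pod on one node."""
    cpu_total = mem_total = 0
    for pod in pods:  # a pod is a list of container dicts
        for container in pod:
            cpu, mem = container_requests(container, for_scoring)
            cpu_total += cpu
            mem_total += mem
    return cpu_total, mem_total

# rr03: mysql, nx, nx1, each with one container and no requests at all.
rr03_pods = [[{}], [{}], [{}]]
print(node_requested(rr03_pods, for_scoring=False))  # (0, 0)
print(node_requested(rr03_pods, for_scoring=True))   # (300, 629145600)
```

The Filter step sees nothing at all on rr03, and scoring sees 300m CPU and 600 MiB: three identical placeholders, whether the real pod is a tiny web server or a memory-hungry database.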

② The Decisive Blow: Image Locality & Layer Sharing

This raises a question: “If scores are tied, shouldn’t it go randomly? Why specifically rr03?”

The culprit is the bonus score from ImageLocality, a scoring plugin that favors nodes which already have the pod’s container image.

- When deploying Nginx: for nx1, the nginx image already pulled for nx is sitting on rr03, so rr03 is chosen (understandable)

- When deploying httpd: “Huh? This is a new image?”

  - But container images are built from layers.

  - mysql, nginx, and httpd are different images, but they may well share base images (Debian, Alpine, etc.) or common library layers underneath.

- Final Verdict:

  - Empty node: “Must download all base layers from scratch” -> no bonus score

  - rr03 node: “Oh? The base layers my neighbors use are already here? What a deal!” -> bonus score granted (+)

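Ultimately, that was my reading: the resource scores could not see the real load on rr03, so its edge in image caching decided the race, and rr03 became a black hole that kept sucking in pods. 🕳️

One caveat on the layer theory: the upstream ImageLocality plugin matches whole images by name against the list of images each node reports in its status; it does not look at individual layers. Shared layers do make the pull on rr03 faster, but on their own they earn no score bonus. The image bonus clearly explains nx1 landing next to nx, but for a pod whose exact image was not on any node yet (like httpd), the choice must have come from another factor, such as another plugin’s score or the scheduler’s random tie-break.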
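Here is a sketch of how the ImageLocality score is computed, based on my reading of the upstream plugin: each of the pod's images that is already on the node (exact name match) contributes its size scaled by the fraction of nodes that have it, and the sum is mapped onto 0..100 between a 23 MB floor and a 1000 MB-per-container ceiling. The image sizes below are hypothetical, and details such as image-name normalization (e.g. adding a default tag) are omitted.

```python
MB = 1024 * 1024
MIN_THRESHOLD = 23 * MB              # a summed score below this earns nothing
MAX_CONTAINER_THRESHOLD = 1000 * MB  # cap per container
MAX_NODE_SCORE = 100

def image_locality_score(pod_images, node_images, image_spread, total_nodes):
    """Score one node for a pod, 0..100.

    pod_images:   image names used by the pod's containers
    node_images:  {image name: size in bytes} the node reports as present
    image_spread: {image name: number of nodes that have the image}
    """
    if not pod_images:
        return 0
    total = 0
    for image in pod_images:
        if image in node_images:  # exact image match only; layers are never compared
            # Images present on only a few nodes are scaled down,
            # which damps the tendency to pile pods onto one node.
            spread = image_spread[image] / total_nodes
            total += int(node_images[image] * spread)
    max_threshold = MAX_CONTAINER_THRESHOLD * len(pod_images)
    total = min(max(total, MIN_THRESHOLD), max_threshold)
    return MAX_NODE_SCORE * (total - MIN_THRESHOLD) // (max_threshold - MIN_THRESHOLD)

# Hypothetical sizes: rr03 holds the mysql and nginx images; 3 nodes in total.
rr03_images = {"mysql": 600 * MB, "nginx": 200 * MB}
spread = {"mysql": 1, "nginx": 1}
print(image_locality_score(["nginx"], rr03_images, spread, 3))  # 4
print(image_locality_score(["httpd"], rr03_images, spread, 3))  # 0
```

Under these made-up sizes, rr03 gets 4 points for nx1 and every other node gets 0; for httpd, rr03 gets 0 even though it holds mysql and nginx, because no layer information enters the calculation. Small as it is, a few points is enough to break a tie.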

3. Solution: How to spread pods out? 🛠️

How can we prevent this phenomenon and distribute pods evenly across the entire cluster?

✅ Method 1: Explicitly specify Resource Requests (Recommended)

Give your pod a name tag saying, “I’m this big!”

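```yaml
# Under each container in the pod spec
resources:
  requests:
    cpu: "200m"   # Request 0.2 core
```

This way, the scheduler can correctly judge, “Ah, rr03 is already getting full. I should send this pod to an emptier node!”, and the load-spreading logic of LeastAllocated scoring, which favors nodes with more free room, starts to work.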
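Once requests are set, the LeastAllocated arithmetic has something to work with. Below is a CPU-only sketch, assuming a hypothetical 2-core node (2000m allocatable) and 200m per pod; as I understand it, the real NodeResourcesFit plugin computes this per resource and averages CPU and memory by weight (equal weights by default).

```python
MAX_NODE_SCORE = 100

def least_allocated(requested: int, allocatable: int) -> int:
    """0..100 for one resource: the more room left after placing the pod, the higher."""
    if allocatable == 0 or requested > allocatable:
        return 0
    return (allocatable - requested) * MAX_NODE_SCORE // allocatable

def cpu_score(existing_requests_m: list, pod_request_m: int, allocatable_m: int) -> int:
    # The incoming pod's own request is counted as if it were already placed.
    return least_allocated(sum(existing_requests_m) + pod_request_m, allocatable_m)

ALLOCATABLE_M = 2000  # hypothetical 2-core node
print(cpu_score([], 200, ALLOCATABLE_M))               # empty node: 90
print(cpu_score([200, 200, 200], 200, ALLOCATABLE_M))  # rr03 (mysql, nx, nx1): 60
```

The empty node now scores 90 against rr03's 60, a gap no small image bonus can close.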

✅ Method 2: Set Anti-Affinity

This method forcibly sets, “I don’t want to be where someone like me is!”

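```yaml
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - labelSelector: ...                    # select "pods like me"
      topologyKey: kubernetes.io/hostname   # required; "where" = the same node
```

Because a required anti-affinity rule is checked in the Filter step, pods matching the selector are never placed on the same node, regardless of score calculation. (If every node already runs a matching pod, the new pod stays Pending.)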
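A required anti-affinity rule is a filter, not a score, which is why it overrides everything else. Here is a minimal sketch of that filter, assuming `matchLabels`-style selectors and `topologyKey: kubernetes.io/hostname` (one node = one domain). The `app: web` label and the node names other than rr03 are hypothetical, and the real plugin also handles namespaces, `matchExpressions`, and the anti-affinity rules of pods that are already running.

```python
def matches(labels: dict, selector: dict) -> bool:
    """matchLabels semantics: every key/value in the selector must be present."""
    return all(labels.get(k) == v for k, v in selector.items())

def anti_affinity_filter(nodes: dict, selector: dict) -> list:
    """Keep only nodes where no existing pod matches the selector.

    nodes maps a node name to the label dicts of the pods already on it;
    each node is its own topology domain (topologyKey kubernetes.io/hostname).
    """
    return [name for name, pods in nodes.items()
            if not any(matches(labels, selector) for labels in pods)]

cluster = {
    "rr01": [],
    "rr02": [{"app": "db"}],
    "rr03": [{"app": "web"}],
}
print(anti_affinity_filter(cluster, {"app": "web"}))  # ['rr01', 'rr02']
```

rr03 is removed before scoring even starts, so no image bonus can pull the pod back there.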

4. Conclusion 📝

- The Kubernetes scheduler does not deploy in ABC order.

- If requests are not set, the scheduler effectively cannot see the pod’s size: the Filter step counts it as ‘0’, and scoring uses only a small placeholder.

- In this case, a node that already holds the pod’s image receives an ImageLocality bonus, which can pull pods into one place.

- For stable operation, setting Resource Requests is not an option, but a necessity!

That’s it for today’s struggle! I hope this helped you understand the mind of our picky scheduler. 👋
