Hello! Today, we’re going to talk about the “unfamiliar IP ranges” you might encounter at least once when dealing with cloud-native environments.

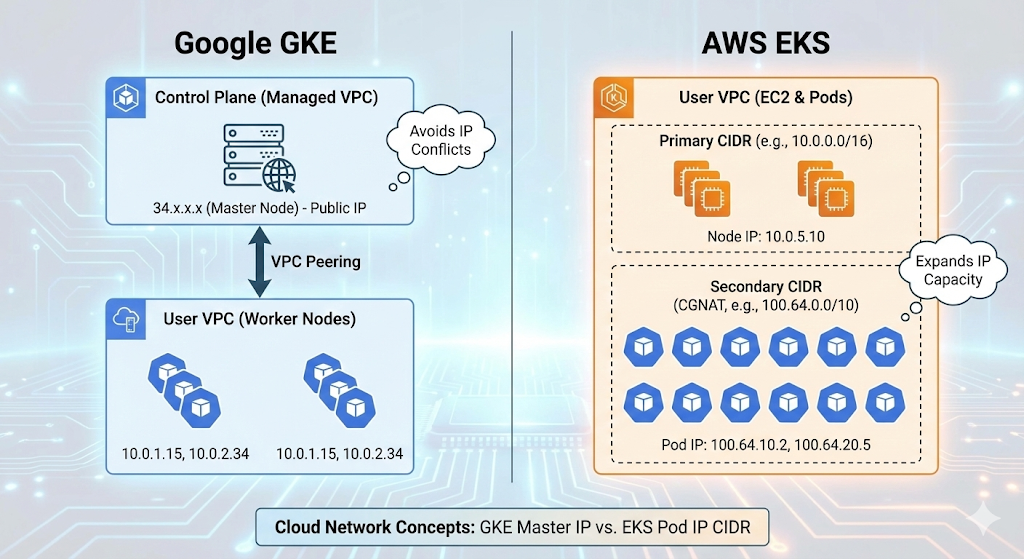

When operating Kubernetes, you might sometimes wonder, “Huh? Our company’s VPC range is 10.0.0.0/16, but why is a 34.x.x.x showing up?” or “Why are AWS EKS pod IPs 100.64.x.x?”

These two cases arise for different reasons, but both come down to structural limitations and IP resource management in cloud networks. Let’s dive deep into how GKE and EKS each handle networking! 🚀

1. The Secret of GKE (Google Kubernetes Engine) and the 34.x.x.x Range 🇬

“My cluster is private, so why does the master node use a public IP?”

When you create a GKE cluster and run kubectl cluster-info, you’ll see that the master (control plane) endpoint is a Google public IP such as 34.118.x.x.

🏗️ Structural Reason: Separation of Management Domains

GKE splits each cluster across two separately managed networks:

- User VPC (Worker Nodes): This is the domain you pay for and control. Actual pods run here.

- Google Managed VPC (Control Plane): The master node exists in a separate project/VPC managed by Google.

These two networks are isolated from each other and communicate through VPC Network Peering.

🧐 Why a Public IP (34.x) Specifically?

Here lies the dilemma. What if Google used private IP ranges like 10.x or 192.168.x for the master nodes?

If a user created their VPC with the same 10.x range, the two ranges would overlap, and because VPC peering does not allow overlapping IP ranges, the peering could not be established.

Therefore, Google assigned a public IP range (like 34.x, owned by Google) that “won’t conflict anywhere in the world” to the master nodes.
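The overlap rule is easy to demonstrate with Python's standard `ipaddress` module. This is a minimal sketch: `can_peer` is a hypothetical helper that models only the "no overlapping ranges" requirement of peering, and the control-plane ranges are made-up examples rather than addresses GKE actually assigns.

```python
import ipaddress


def can_peer(vpc_ranges, control_plane_range):
    """Return True if the control-plane range overlaps none of the user's VPC ranges.

    Models only the non-overlap requirement of VPC peering; real peering
    has other constraints that this sketch ignores.
    """
    cp = ipaddress.ip_network(control_plane_range)
    return not any(cp.overlaps(ipaddress.ip_network(r)) for r in vpc_ranges)


# The user VPC from the intro example.
user_vpc = ["10.0.0.0/16"]

# A private control-plane range that happens to fall inside the user's range.
print(can_peer(user_vpc, "10.0.1.0/28"))    # False: overlaps 10.0.0.0/16
# A block from Google-owned public space lies outside every RFC 1918 range.
print(can_peer(user_vpc, "34.118.0.0/28"))  # True
```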

💡 Key Takeaways

- Conflict Prevention: Guarantees connectivity no matter which private IP range the user’s VPC uses.

- Accessibility: Serves as an endpoint for developers to issue kubectl commands from outside (the internet).

- Security: Even with the Private Cluster option enabled, this public IP range is used internally for routing, such as tunneling between the master and worker nodes.

2. The Identity of AWS EKS and 100.64.x.x (Secondary CIDR) 🅰️

“I’m running out of VPC IPs! I need a dedicated range for pods!”

When operating AWS EKS, you’ll often see pod IPs assigned to the 100.64.x.x range. This is closely related to the characteristics of the AWS VPC CNI plugin.

😱 The Problem: IP Exhaustion

AWS VPC CNI assigns a real VPC IP address to each individual pod. This gives pods native VPC networking without an overlay, but it consumes VPC addresses very quickly.

For example, even if you create a VPC with 10.0.0.0/16 (65,536 addresses), after splitting subnets and launching resources like EC2, ELB, and RDS, you’ll quickly run out of IPs for thousands of pods.
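Both halves of this problem can be checked with a little arithmetic. The Python sketch below uses the pod-limit formula AWS publishes for the VPC CNI, max pods = ENIs × (IPv4 addresses per ENI − 1) + 2, with m5.large values (3 ENIs, 10 IPv4 addresses each) that you should verify for your own instance type. The subnet figures assume AWS's five reserved addresses per subnet and a hypothetical split of the VPC into /20 subnets.

```python
import ipaddress

AWS_RESERVED_PER_SUBNET = 5  # AWS keeps the first four and the last address of every subnet


def max_pods(enis, ipv4_per_eni):
    """VPC CNI pod limit per node: each ENI's primary IP is not handed to pods,
    and +2 accounts for host-network pods such as aws-node and kube-proxy."""
    return enis * (ipv4_per_eni - 1) + 2


def vpc_ips_when_full(enis, ipv4_per_eni):
    """VPC addresses one node holds once every ENI slot is allocated."""
    return enis * ipv4_per_eni


def usable_ips(network):
    """Addresses left in a subnet after AWS's reserved ones."""
    return network.num_addresses - AWS_RESERVED_PER_SUBNET


# Per node: m5.large figures (verify against AWS docs for your instance type).
print(max_pods(3, 10))           # 29
print(vpc_ips_when_full(3, 10))  # 30

# Per VPC: the 10.0.0.0/16 example, split into /20 subnets (an assumed layout).
vpc = ipaddress.ip_network("10.0.0.0/16")
subnets = list(vpc.subnets(new_prefix=20))
print(vpc.num_addresses)                    # 65536
print(len(subnets))                         # 16
print(usable_ips(subnets[0]))               # 4091
print(sum(usable_ips(s) for s in subnets))  # 65456
```

Since nodes, pods, load balancers, and databases all draw from the same pool, the per-node cost adds up quickly as the cluster grows.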

🛠️ The Solution: Adding a Secondary CIDR

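The technique used here is to attach a secondary IPv4 CIDR block (Secondary CIDR) to the VPC. The most commonly recommended source for it is the 100.64.0.0/10 range. Because a single VPC CIDR block can be at most /16, in practice you attach a block carved from it, such as 100.64.0.0/16.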
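A rough pre-flight check for a candidate secondary block might look like the sketch below. It encodes two things: an AWS VPC CIDR block must be between /16 and /28, and the block should sit inside 100.64.0.0/10 without overlapping the VPC's existing ranges. `check_secondary_cidr` is a hypothetical helper; AWS applies further restrictions on which secondary ranges are allowed, and this sketch does not model them.

```python
import ipaddress

CGNAT = ipaddress.ip_network("100.64.0.0/10")  # RFC 6598 shared address space


def check_secondary_cidr(candidate, existing):
    """Return a list of problems found (IPv4 only); an empty list means the basic checks pass."""
    net = ipaddress.ip_network(candidate)
    problems = []
    # AWS VPC CIDR blocks must be between /16 and /28.
    if not 16 <= net.prefixlen <= 28:
        problems.append(f"{net} must be between /16 and /28")
    # Stay inside the CGNAT range recommended for pod addresses.
    if not net.subnet_of(CGNAT):
        problems.append(f"{net} is outside {CGNAT}")
    # The new block must not collide with ranges already on the VPC.
    for cidr in existing:
        if net.overlaps(ipaddress.ip_network(cidr)):
            problems.append(f"{net} overlaps existing {cidr}")
    return problems


print(check_secondary_cidr("100.64.0.0/16", ["10.0.0.0/16"]))  # []
print(check_secondary_cidr("100.64.0.0/10", ["10.0.0.0/16"]))
# ['100.64.0.0/10 must be between /16 and /28']
```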

🧐 What is 100.64.x.x? (CGNAT)

RFC 6598 reserves this range as Shared Address Space for CGNAT (Carrier-Grade NAT).

- Not a Public IP: It is not routed on the internet.

- Not a Standard Private IP (10.x, 172.16.x, 192.168.x): Because it lies outside the RFC 1918 ranges, it is very unlikely to conflict with the ranges commonly used in corporate internal networks.
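Here is a small Python sketch, using only the standard `ipaddress` module, that sorts an observed address into the buckets discussed above. The `classify` helper and its labels are illustrative, not part of any AWS or Google tooling.

```python
import ipaddress

RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]
CGNAT = ipaddress.ip_network("100.64.0.0/10")  # RFC 6598 shared address space


def classify(ip):
    """Label an IPv4 address as RFC 1918 private, RFC 6598 shared, public, or other."""
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in RFC1918):
        return "private (RFC 1918)"
    if addr in CGNAT:
        return "shared (RFC 6598 / CGNAT)"
    if addr.is_global:
        return "public"
    # Loopback, link-local, documentation ranges, and so on.
    return "other special-purpose"


for ip in ("10.0.1.5", "100.64.3.7", "34.118.0.1"):
    print(ip, "->", classify(ip))
# 10.0.1.5 -> private (RFC 1918)
# 100.64.3.7 -> shared (RFC 6598 / CGNAT)
# 34.118.0.1 -> public
```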

In essence, it’s a convenient reserved range for expanding a private network with little risk of conflict. EKS uses this range exclusively for pods, separating the IP range used by nodes (EC2) from the IP range used by pods and thus solving the IP exhaustion problem.
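On EKS, this split is typically implemented with the VPC CNI's custom networking feature, which places pod network interfaces in subnets carved from the secondary CIDR (configured through ENIConfig resources). The Python sketch below only plans that address layout: `plan_pod_subnets` is a hypothetical helper, and the AZ names, node subnet, and /18 pod-subnet size are assumptions.

```python
import ipaddress


def plan_pod_subnets(secondary_cidr, azs, new_prefix=18):
    """Split the secondary CIDR into one pod subnet per availability zone."""
    blocks = ipaddress.ip_network(secondary_cidr).subnets(new_prefix=new_prefix)
    # zip stops at the shorter sequence, so detect when there are more AZs than blocks.
    plan = dict(zip(azs, blocks))
    if len(plan) < len(azs):
        raise ValueError("secondary CIDR too small for one subnet per AZ")
    return plan


node_subnet = ipaddress.ip_network("10.0.0.0/20")  # assumed node (EC2) subnet
pods = plan_pod_subnets("100.64.0.0/16", ["us-east-1a", "us-east-1b", "us-east-1c"])
for az, net in pods.items():
    print(az, net, net.overlaps(node_subnet))
# us-east-1a 100.64.0.0/18 False
# us-east-1b 100.64.64.0/18 False
# us-east-1c 100.64.128.0/18 False
```

Keeping pods in their own blocks means the node subnets stay small and predictable, while pod capacity grows with the secondary range.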

💡 Key Takeaways

- IP Acquisition: Lets you run far more pods than the original VPC address space would allow, and more secondary blocks can be attached if needed.

- Ease of Management: Clear separation between node IPs and pod IPs simplifies management.

- Standard Compliance: Utilizes the CGNAT range to avoid private IP conflicts.

🥊 A Quick Comparison

| Category | GKE (Google) | EKS (AWS) |
|---|---|---|
| Observed IP | 34.118.x.x (example) | 100.64.x.x |
| IP Type | Public IP | CGNAT IP (shared address space) |
| Target Resource | Control Plane (Master Node) | Pod (inside worker node) |
| Purpose of Use | Preventing IP conflicts with the user VPC; external access | Solving VPC IP exhaustion; securing a dedicated range for pods |
| Network Structure | Google-managed VPC ↔ user VPC peering | Secondary CIDR expansion within a single VPC |

---

📝 Conclusion

When using the cloud, it can be confusing to see “IPs you didn’t configure” appear. However, behind the scenes, there are deep considerations by Cloud Service Providers (CSPs) for conflict prevention and scalability.

- GKE’s 34.x: This is the Google-managed side of the cluster, so when you see it, think “Ah, that’s the master node!”

- EKS’s 100.64.x: You can think of it as an “expansion slot” to solve the pod IP shortage.

Understanding these differences will allow you to respond much more flexibly when designing hybrid or multi-cloud networks! 👍

If this was helpful, please give it a like! 👏
